Hybrid search is a multi-faceted vector search that increases the relevancy of search results by combining two powerful search methods: the precision of keyword-based sparse vector search, and the contextual understanding of semantic dense vector search. Hybrid search is advantageous over pure semantic search in scenarios where contextual specificity is critical, for example to ensure that domain-specific terms are considered and weighted appropriately.

Sparse vs. Dense Vectors

Let’s understand the two types of vectors needed to perform a hybrid search:

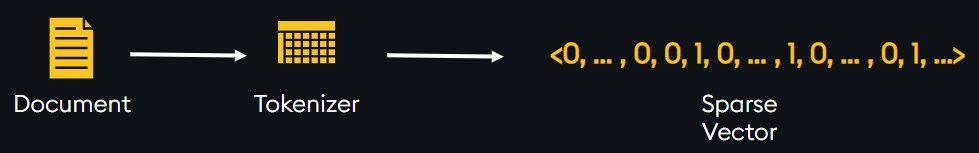

Sparse Vectors: High-dimensional vectors that contain mostly zero values, with only a few non-zero elements. Sparse vectors are created by passing a document through a tokenizer. A tokenizer splits raw text into words or sub-words and then maps each of these units to a numerical value called a token. Each unique word or sub-word in a document then has an associated token. These tokens, and a count of how many times each token appears in the document, are used to build a sparse vector for that document.
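To make this concrete, here is a minimal Python sketch of turning text into a sparse vector. It assumes a toy whitespace tokenizer and a small, hypothetical vocabulary (`VOCAB`, plus an assumed `VOCAB_SIZE` of 30,000) in place of a real sub-word tokenizer, and it stores only the non-zero entries as a `{token: count}` dictionary, which is what makes the representation sparse.

```python
from collections import Counter

# Hypothetical toy vocabulary: word -> token ID. A real tokenizer
# (e.g. a BERT WordPiece tokenizer) has tens of thousands of entries.
VOCAB = {"hybrid": 0, "search": 1, "sparse": 2, "dense": 3, "vector": 4, "combines": 5, "and": 6}
VOCAB_SIZE = 30_000  # assumed vocabulary size, i.e. the sparse vector's dimensionality


def toy_tokenize(text: str) -> list[int]:
    """Lower-case, split on whitespace, and keep only words found in VOCAB."""
    return [VOCAB[w] for w in text.lower().split() if w in VOCAB]


def sparse_vector(text: str) -> dict[int, int]:
    """Return {token_id: count}; every token ID not present is implicitly zero."""
    return dict(Counter(toy_tokenize(text)))


doc = "Hybrid search combines sparse vector search and dense vector search"
vec = sparse_vector(doc)
print(vec)  # → {0: 1, 1: 3, 5: 1, 2: 1, 4: 2, 6: 1, 3: 1}
print(f"{len(vec)} non-zero entries out of {VOCAB_SIZE}")
```

Only seven entries are stored out of 30,000 possible dimensions; every other dimension is implicitly zero.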

Dense Vectors: High-dimensional vectors containing mostly non-zero values. These vectors represent the semantic meaning, relationships, and attributes within the document. Dense vectors are created by passing a document into an embedding model, producing a vector of numeric values that represent the original data.

Hybrid Search Architecture

With KDB.AI, users have the option to perform either a dense or a sparse vector search independently, or they can create a hybrid search index that returns results based on both approaches. Hybrid search then combines and re-ranks the results based on user-set weight values that control the importance of each search type.

Dense vector searches have a variety of configurable options, such as the index type (flat, HNSW, IVF, IVFPQ), the number of dimensions, and the similarity metric (cosine similarity, dot product, Euclidean distance). Dense similarity search is used to find the most relevant results from a semantic perspective.
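KDB.AI computes these metrics internally; the sketch below simply spells out what each one measures, using plain Python lists as hypothetical embeddings. Cosine similarity and dot product are higher-is-better, while Euclidean (L2) distance is lower-is-better.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Angle-based similarity in [-1, 1]; ignores vector magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def dot_product(a: list[float], b: list[float]) -> float:
    """Unnormalized similarity; larger means more similar, and magnitude matters."""
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """L2 distance; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


query = [1.0, 0.0, 1.0]  # hypothetical query embedding
doc = [2.0, 0.0, 2.0]    # hypothetical document embedding: same direction, twice the length
print(cosine_similarity(query, doc))   # ≈ 1.0
print(dot_product(query, doc))         # 4.0
print(euclidean_distance(query, doc))  # ≈ 1.414 (the square root of 2)
```

The two vectors point in the same direction, so cosine similarity is 1 (up to floating-point rounding), while the Euclidean distance is still non-zero because their magnitudes differ.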

Sparse vector searches use the BM25 algorithm to find the most relevant keyword matches by performing advanced string matching. BM25 considers the number of keyword matches, term frequency, and term rarity to identify relevant text documents. A key benefit of the way KDB.AI implements sparse search is that the developer can tune the ‘k’ and ‘b’ parameters of the BM25 algorithm at run time by passing them in as search parameters. This gives flexibility when tuning sparse search to your use case, controlling term saturation and the impact of document length on relevance. Additionally, and unique to KDB.AI, the underlying BM25 statistics are updated whenever new data is inserted into the sparse column. This ensures that scoring stays up to date and aligns with the current sparse data during BM25 search.
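To show how term frequency, term rarity, k, and b interact, here is a from-scratch sketch of the standard Okapi BM25 formula over a tiny, hypothetical pre-tokenized corpus. It is an illustration only: the IDF variant used here, `ln(1 + (N - n + 0.5) / (n + 0.5))`, and the whitespace tokenization are assumptions, and KDB.AI's internal scoring may differ.

```python
import math
from collections import Counter


def bm25_scores(query: list[str], docs: list[list[str]], k: float = 1.25, b: float = 0.75) -> list[float]:
    """Score each tokenized document against the tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs  # average document length
    # Document frequency: in how many documents each term appears (drives term rarity).
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: b controls how much longer documents are penalized.
            norm = k * (1 - b + b * len(d) / avgdl)
            # Term-frequency saturation: k controls how quickly extra occurrences stop helping.
            score += idf * tf[term] * (k + 1) / (tf[term] + norm)
        scores.append(score)
    return scores


docs = [
    "the refund policy covers damaged items".split(),
    "shipping policy and delivery times".split(),
    "refund requests for damaged or lost items".split(),
]
query = "refund damaged".split()
scores = bm25_scores(query, docs)
for text, score in sorted(zip(docs, scores), key=lambda pair: pair[1], reverse=True):
    print(round(score, 3), " ".join(text))
```

Because the document frequencies (`df`) and the average document length (`avgdl`) are computed over the whole corpus, adding documents changes every score. That is why keeping the BM25 statistics updated on insertion matters.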

When conducting a hybrid search, the relative importance of the sparse and dense search results can be tuned to suit the requirements of the query. In KDB.AI, this is done with the weight parameter, adjustable between 0 and 1, which sets the weighting between the two search techniques.
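The text above does not specify how KDB.AI merges the two result lists internally. As a stand-in, the sketch below uses weighted reciprocal rank fusion (RRF), one common way to combine rankings: each document earns `weight / (c + rank)` from every list it appears in. The document IDs are hypothetical, and `c = 60` is the constant conventionally used with RRF.

```python
def weighted_rrf(
    rankings: dict[str, list[str]],
    weights: dict[str, float],
    c: int = 60,
) -> list[str]:
    """Fuse ranked ID lists from several indexes into one ranking (weighted RRF)."""
    fused: dict[str, float] = {}
    for index_name, ids in rankings.items():
        w = weights[index_name]
        for rank, doc_id in enumerate(ids, start=1):
            # Each appearance contributes weight / (c + rank); better ranks contribute more.
            fused[doc_id] = fused.get(doc_id, 0.0) + w / (c + rank)
    # Highest fused score first; sorted() is stable, so ties keep first-seen order.
    return sorted(fused, key=fused.get, reverse=True)


rankings = {
    "sparse_index": ["a", "b", "c"],  # hypothetical BM25 results, best first
    "dense_index": ["c", "a", "d"],   # hypothetical dense results, best first
}
print(weighted_rrf(rankings, {"sparse_index": 0.5, "dense_index": 0.5}))  # ['a', 'c', 'b', 'd']
print(weighted_rrf(rankings, {"sparse_index": 0.1, "dense_index": 0.9}))  # ['c', 'a', 'd', 'b']
```

With equal weights, `a` wins because it ranks highly in both lists; shifting weight to the dense index promotes `c`, the top dense result.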

Hybrid Search Implementation

To implement a hybrid search, first create sparse and dense vectors representing your data. Note: the number of sparse vectors and dense vectors must match.

See full notebook here: LINK

For sparse vectors, a tokenizer such as BertTokenizerFast from the Hugging Face transformers library can tokenize a document. The sparse vector must be formatted as a dictionary of key:value pairs, where each key is a token that represents a unique term in the document and each value is a count of how many times that term appears in the document.
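A minimal sketch of the formatting step, assuming the tokenizer has already produced lists of integer token IDs. The transformers calls in the comment are shown for context only and are not executed; the helper names `to_sparse` and `to_sparse_batch` and the example token IDs are hypothetical.

```python
from collections import Counter

# Assumed upstream step (requires `pip install transformers` and a model download,
# so it is not executed here):
#   from transformers import BertTokenizerFast
#   tokenizer = BertTokenizerFast.from_pretrained("bert-base-uncased")
#   token_ids = tokenizer(text, add_special_tokens=False)["input_ids"]


def to_sparse(token_ids: list[int], ignore_ids: frozenset[int] = frozenset()) -> dict[int, int]:
    """Count token IDs into the {token: count} dictionary the sparse column expects."""
    return dict(Counter(t for t in token_ids if t not in ignore_ids))


def to_sparse_batch(batch_ids: list[list[int]], ignore_ids: frozenset[int] = frozenset()) -> list[dict[int, int]]:
    """Build one sparse vector per document, preserving document order."""
    return [to_sparse(ids, ignore_ids) for ids in batch_ids]


# Illustrative IDs: 101 and 102 stand in for special tokens we choose to ignore.
print(to_sparse([101, 7592, 2088, 7592, 102], ignore_ids=frozenset({101, 102})))  # {7592: 2, 2088: 1}
```

If you tokenize in batches with padding enabled, pass the tokenizer's padding ID in `ignore_ids` so that padding is not counted as a term; the same applies to special tokens if `add_special_tokens` is left on.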

For dense vectors, an embedding model such as all-MiniLM-L6-v2 from the sentence_transformers library, also available through Hugging Face, can embed a text document. Note the number of dimensions the embedding model outputs (384 for all-MiniLM-L6-v2), as it is needed to define the KDB.AI table in the next step.
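Before inserting data, it can help to assemble one row per chunk and check the constraints described above: equal numbers of sparse and dense vectors, and dense vectors whose length matches the index dimensions. This sketch assumes the column names used in the table schema below (`ID`, `chunk`, `sparse`, `dense`); the `build_rows` helper and the choice of string positions as IDs are hypothetical. The 384 figure is the output size of all-MiniLM-L6-v2, which you can confirm with `SentenceTransformer("all-MiniLM-L6-v2").get_sentence_embedding_dimension()`.

```python
EMBEDDING_DIMS = 384  # all-MiniLM-L6-v2 output size; must equal the dense index "dims"


def build_rows(
    chunks: list[str],
    sparse_vecs: list[dict[int, int]],
    dense_vecs: list[list[float]],
    dims: int = EMBEDDING_DIMS,
) -> list[dict]:
    """Pair each chunk with its sparse and dense vectors, failing fast on mismatches."""
    if not (len(chunks) == len(sparse_vecs) == len(dense_vecs)):
        raise ValueError(
            f"count mismatch: {len(chunks)} chunks, "
            f"{len(sparse_vecs)} sparse vectors, {len(dense_vecs)} dense vectors"
        )
    rows = []
    for i, (chunk, sparse, dense) in enumerate(zip(chunks, sparse_vecs, dense_vecs)):
        if len(dense) != dims:
            raise ValueError(f"row {i}: dense vector has {len(dense)} dims, expected {dims}")
        # Column names follow the table schema: ID, chunk, sparse, dense.
        rows.append({"ID": str(i), "chunk": chunk, "sparse": sparse, "dense": [float(x) for x in dense]})
    return rows


rows = build_rows(["first chunk", "second chunk"], [{101: 1}, {202: 2}], [[0.0] * 384, [0.5] * 384])
print(len(rows), rows[1]["ID"], rows[1]["sparse"])
```

KDB.AI's Python client examples typically pass a pandas DataFrame to `table.insert`, so `pd.DataFrame(rows)` is a natural next step; check the client documentation for the exact insert signature in your version.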

To define a table in KDB.AI capable of hybrid search, we must define columns for both sparse and dense vector embeddings:

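```python
# Define the table columns: an ID, the raw text chunk, and one column per vector type
schema = [
    {"name": "ID", "type": "str"},
    {"name": "chunk", "type": "str"},
    {"name": "sparse", "type": "general"},   # sparse vectors: {token: count} dictionaries
    {"name": "dense", "type": "float64s"},   # dense vectors: lists of floats
]

# Define the indexes
indexes = [
    {
        "type": "flat",
        "name": "dense_index",
        "column": "dense",
        "params": {"dims": 384, "metric": "L2"},  # 384 = all-MiniLM-L6-v2 output size
    },
    {
        "type": "bm25",
        "name": "sparse_index",
        "column": "sparse",
        "params": {"k": 1.25, "b": 0.75},  # BM25 term saturation (k) and length normalization (b)
    },
]
```

Let’s break down the schema (see the technical documentation here):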

Column 1: ID – This column will hold a unique ID for each chunk.

Column 2: chunk – This column will hold the raw text of each chunk for reference.

Column 3: sparse – The sparse column holds sparse vectors, which are created from passing raw text through a tokenizer.

Column 4: dense – The dense column holds dense vectors, which are created from passing raw text into an embedding model.

Next, let’s break down the indexes we define to perform a hybrid search:

In this example, we have two indexes, one for dense search and one for sparse search (bm25). When defining the indexes, we specify the index type, give the index a name, specify the schema column that the index will be applied to, and set any additional parameters including dimensions, search metric, ‘k’, and ‘b’.

dense_index: Uses a flat index with 384 dimensions and the Euclidean distance (L2) metric.

sparse_index: Uses the BM25 index type. We also define the ‘k’ and ‘b’ parameters. These parameters can be adjusted at runtime, enabling hyperparameter tuning for term saturation and the impact of document length on relevance.

With each of these columns and indexes defined, you can create a KDB.AI table that has the flexibility to ingest both sparse and dense vectors, and run a dense search, a sparse search, or a hybrid search.

A bit more on ‘b’ and ‘k’:

- “b”: Document length impact on relevance. A numeric value between 0 and 1 (inclusive). In general, documents covering multiple topics at a higher level should use a higher value of b. Documents covering a specific topic should use a lower value of b.

- “k”: Term saturation. A numeric value between 0 and 3 (inclusive). It controls how much more relevant additional occurrences of a term make a document: the lower k is, the faster term saturation occurs, and the less weight additional occurrences carry.
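The effect of k and b can be seen by isolating BM25's term-frequency factor, `tf * (k + 1) / (tf + k * (1 - b + b * doc_len / avg_len))`. This sketch uses that standard Okapi BM25 form with hypothetical document lengths; KDB.AI's exact internals are not described here.

```python
def tf_component(tf: int, k: float, b: float, doc_len: float, avg_len: float) -> float:
    """Standard BM25 term-frequency factor for one term in one document."""
    return tf * (k + 1) / (tf + k * (1 - b + b * doc_len / avg_len))


# k: how fast repeated occurrences saturate (average-length document, so b has no effect).
for k in (0.5, 1.25, 3.0):
    print("k =", k, [round(tf_component(tf, k, 0.75, 100, 100), 2) for tf in (1, 2, 5, 10)])

# b: how strongly a long document (3x the average length) is penalized, with tf fixed at 2.
for b in (0.0, 0.75, 1.0):
    print("b =", b, round(tf_component(2, 1.25, b, 300, 100), 2))
```

With k = 0.5 the factor barely passes 1.4 even at ten occurrences, while with k = 3 it keeps climbing toward its ceiling of k + 1. For a document three times the average length, raising b from 0 to 1 drops the factor from about 1.38 to about 0.78.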

When performing a hybrid search, the user’s prompt is converted into both a sparse and a dense query vector, using the same tokenizer and embedding model that were used to produce the stored vectors.

These query vectors are then passed as parameters to the hybrid search. We also pass ‘index_params’ into the search; this is where the weights for the sparse and dense searches are set (in this case, they are weighted evenly, 50/50).

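```python
# sparse_query: {token: count} dict built with the same tokenizer as the stored data
# dense_query: embedding of the prompt from the same embedding model
results = table.search(
    vectors={"sparse_index": sparse_query, "dense_index": dense_query},
    index_params={
        "sparse_index": {"weight": 0.5},
        "dense_index": {"weight": 0.5},
    },
    n=5,  # number of results to return
)
```

It is worth noting that, whilst it is common practice to use the same prompt to generate both the sparse and dense query vectors, KDB.AI hybrid search also allows different inputs to be used for each, supporting a wider range of use cases.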
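When experimenting with weightings or with different sparse and dense inputs, it can help to build the search arguments in one place. The `hybrid_search_kwargs` helper below is hypothetical: it reproduces the argument structure of the `table.search` call above and, as a convention chosen for this sketch, sets the dense weight to `1 - sparse_weight`.

```python
def hybrid_search_kwargs(
    sparse_query: dict[int, int],
    dense_query: list[float],
    sparse_weight: float = 0.5,
    n: int = 5,
) -> dict:
    """Return keyword arguments for a hybrid table.search() call.

    The two weights always sum to 1 (a convention of this sketch).
    """
    if not 0.0 <= sparse_weight <= 1.0:
        raise ValueError("sparse_weight must be between 0 and 1")
    return {
        # The sparse and dense queries may come from the same prompt or from different inputs.
        "vectors": {"sparse_index": sparse_query, "dense_index": dense_query},
        "index_params": {
            "sparse_index": {"weight": sparse_weight},
            "dense_index": {"weight": 1.0 - sparse_weight},
        },
        "n": n,
    }


# Hypothetical query vectors; in practice they come from the tokenizer and embedding model.
kwargs = hybrid_search_kwargs({7592: 1}, [0.1, 0.2, 0.3], sparse_weight=0.7)
print(kwargs["index_params"])
# With a live KDB.AI table: results = table.search(**kwargs)
```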

Hybrid Search Use Cases

- Legal Document Search:

Offering law firms and legal departments the ability to sift through vast databases of legal documents by matching specific legal phrases or citations while also considering the broader context and meaning of the search query, enhancing the efficiency of legal research.

- Human Resources and Recruitment:

Improving candidate search and job matching in recruitment platforms by aligning candidates’ resumes and job descriptions not only based on specific skills or titles but also on the semantic meaning of their experiences and job requirements.

- Content Recommendation Systems:

Creating more sophisticated recommendation engines for media streaming platforms, news aggregators, and social media sites, where content is matched to user preferences and behaviors using a blend of semantic understanding and specific term matching.

- Customer Support Automation:

Enhancing chatbots and virtual assistants with the ability to understand and retrieve information from a knowledge base more accurately, providing users with relevant answers and support documentation based on both explicit queries and the underlying context of their questions.

Hybrid Search with Temporal Similarity Search

Hybrid search offers a number of search combinations beyond sparse and dense vectors. Sparse vector search can be combined with both Transformed and Non-Transformed Temporal Similarity Search. Combining sparse and temporal vectors in a hybrid search can add further insight when identifying trends and patterns within time series datasets.

Wrapping Up

Hybrid search blends the precision of sparse vector search with the contextual depth of dense vector search to significantly enhance result relevancy across your applications. Its flexible architecture allows for tailored weighting between search types, offering application-based customization. Hybrid search will help your business unlock new insights and efficiencies in your vector database powered AI applications.

Check out the documentation to learn more and get started with KDB.AI hybrid search today.